Our position on AI

We believe AI, robotics, and digital money are rapidly converging toward something that looks very much like the kind of global control system Scripture warns us about, and it may be coming soon. If there were ever a set of tools that could efficiently control global commerce, speech, and movement, this would be it. We do not claim prophetic certainty that "this is it," but in light of the patterns in the Word and the speed of recent developments, we see it as a plausible and highly reasonable expectation, and one we hold with confidence.

At the same time, we believe YHWH is sovereign over history, that nothing surprises him, and that those who belong to Yeshua have no reason to live in panic or despair. Our desire is to speak honestly about the dangers, resist the rush toward concentrated "godlike" power, and still make careful use of limited, constrained tools that can help the body study Scripture and test everything.

God is our refuge and strength,

a very present help in trouble (Psalm 46:1).

A living document

The AI landscape is changing so quickly that we expect to revisit and revise this content frequently. When major developments happen, we intend to update how we see them in light of Scripture and the larger prophetic storyline.

Who this page is for

- Brothers and sisters who are concerned about "AI" and are not sure if they should use the 119 Assistant at all.

- Secondarily, those who are more eschatology-minded and want to understand how we see AI fitting into broader prophetic expectations (Mark of the Beast, beast system, Greater Exodus, etc.).


Why this page exists

People use the word "AI" to mean many different things. That confusion is part of why this page exists. We want to distinguish between the direction of travel (toward AGI/ASI and concentrated power) and narrow, constrained tools that can be used carefully within strict, transparent boundaries.

What we mean by "AI" (and why definitions matter)

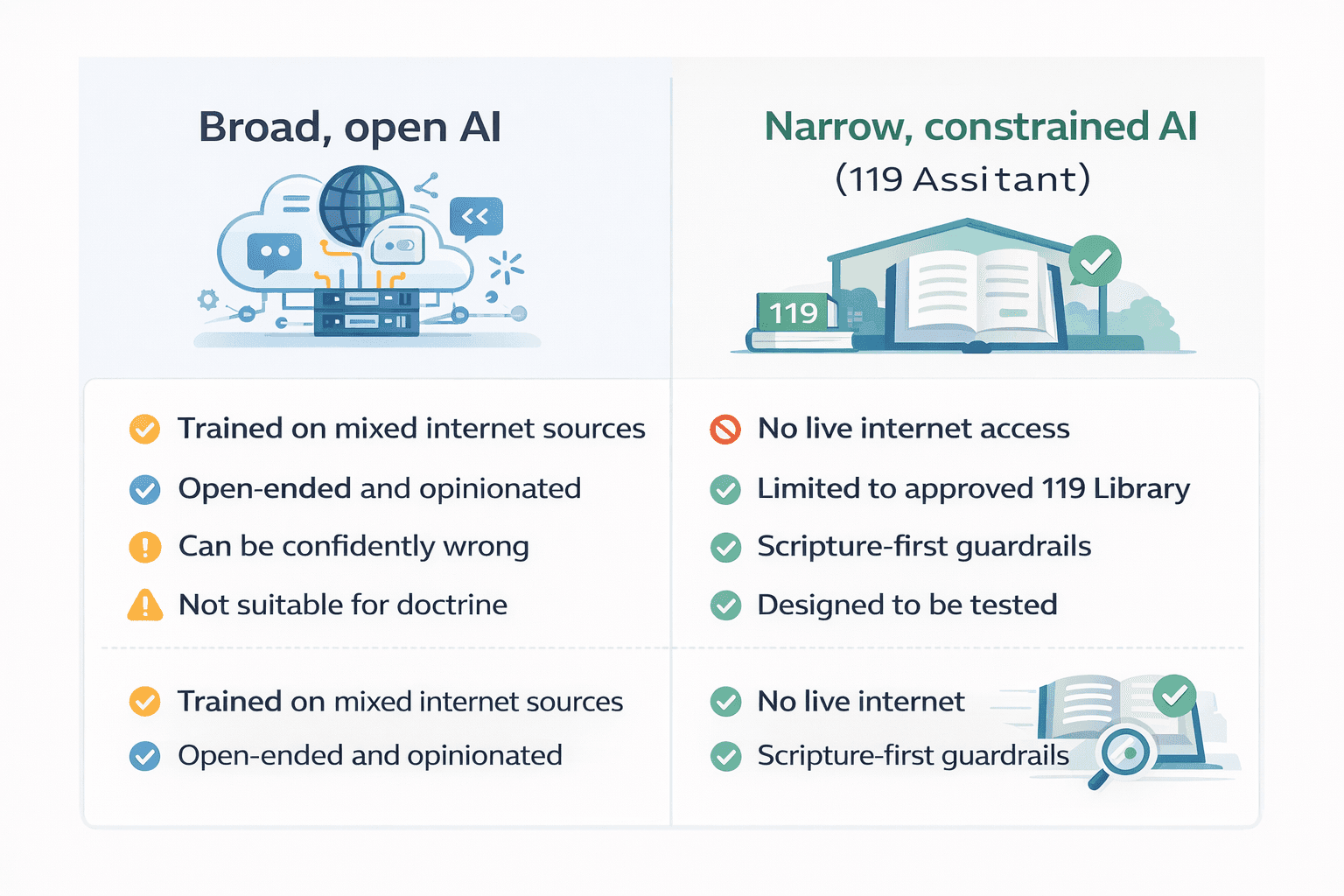

Broad, open AI (general consumer chatbots and platforms)

Examples: ChatGPT, Gemini, Claude, Grok, and many other tools built on large models trained on wide swaths of the internet.

Very roughly, these systems:

- Are trained on massive mixtures of web pages, books, code, and media.

- Are designed to answer almost any kind of question.

- Often sound confident even when they are wrong.

- Can be steered into opinions, novelty, speculation, and theological error.

- Are increasingly woven into search, browsers, operating systems, and workplace tools.

- In principle, are on a path that could evolve toward AGI (artificial general intelligence) and possibly ASI (artificial superintelligence).

Our recommendation

Because these systems are broad, open-ended, and heavily shaped by the worldview of their creators and the majority scholarship they are trained on, we do not recommend using broad open AI tools for doctrine, teaching, or forming conclusions.

They can sometimes be useful for more neutral work (for example, helping gather secular research for later review), but as a Bible study voice they are fundamentally unaccountable, and they do not reason from a Torah-observant perspective.

Narrow, constrained AI

By contrast, narrow AI is AI applied to a very specific task under tight guardrails. Examples:

- A tool that only searches a limited document library and summarizes what it finds.

- A spam filter.

- A transcription model that turns speech into text.

- A recommendation engine inside a single app.

Narrow systems still have risks, but they are much easier to reason about, audit, and constrain than broad, open-ended models.

Why this matters

We believe it is crucial to distinguish the direction of travel (AGI/ASI and concentrated power) from limited tools used within strict, transparent boundaries.

AGI (Artificial General Intelligence)

AGI is usually defined as an AI system that can match or surpass humans across most or all intellectual tasks: learning, reasoning, planning, problem-solving, and adapting to new domains.

Today, many of the people closest to these systems are talking about AGI arriving as early as 2027–2030 if current trends continue:

- A survey of AI timelines has several leading AI lab founders and CEOs expecting AGI on roughly a five-year horizon.

- An ex-OpenAI researcher, Leopold Aschenbrenner, has publicly argued that AGI around 2027 is "strikingly plausible."

- Leaders at Google DeepMind have discussed AGI around 2030 as a serious, central scenario.

Others think these estimates are too aggressive, and some think AGI is farther off or may never fully arrive. There is real disagreement. But the center of gravity among many insiders has clearly shifted from "sometime this century" to "possibly by the late 2020s; if not, then soon after."

ASI (Artificial Superintelligence)

ASI refers to an intelligence that vastly exceeds human capability in virtually every domain—scientific creativity, strategic planning, persuasion, engineering, and more. Many AI risk researchers have argued that such systems could be extremely hard to control and could present catastrophic or even existential risks if misused or misaligned.

Key distinction

Keeping these two categories apart is the key: the direction of travel (AGI/ASI and concentrated power) is one thing, and the limited, narrow tools that can be used carefully within strict, transparent boundaries are another.

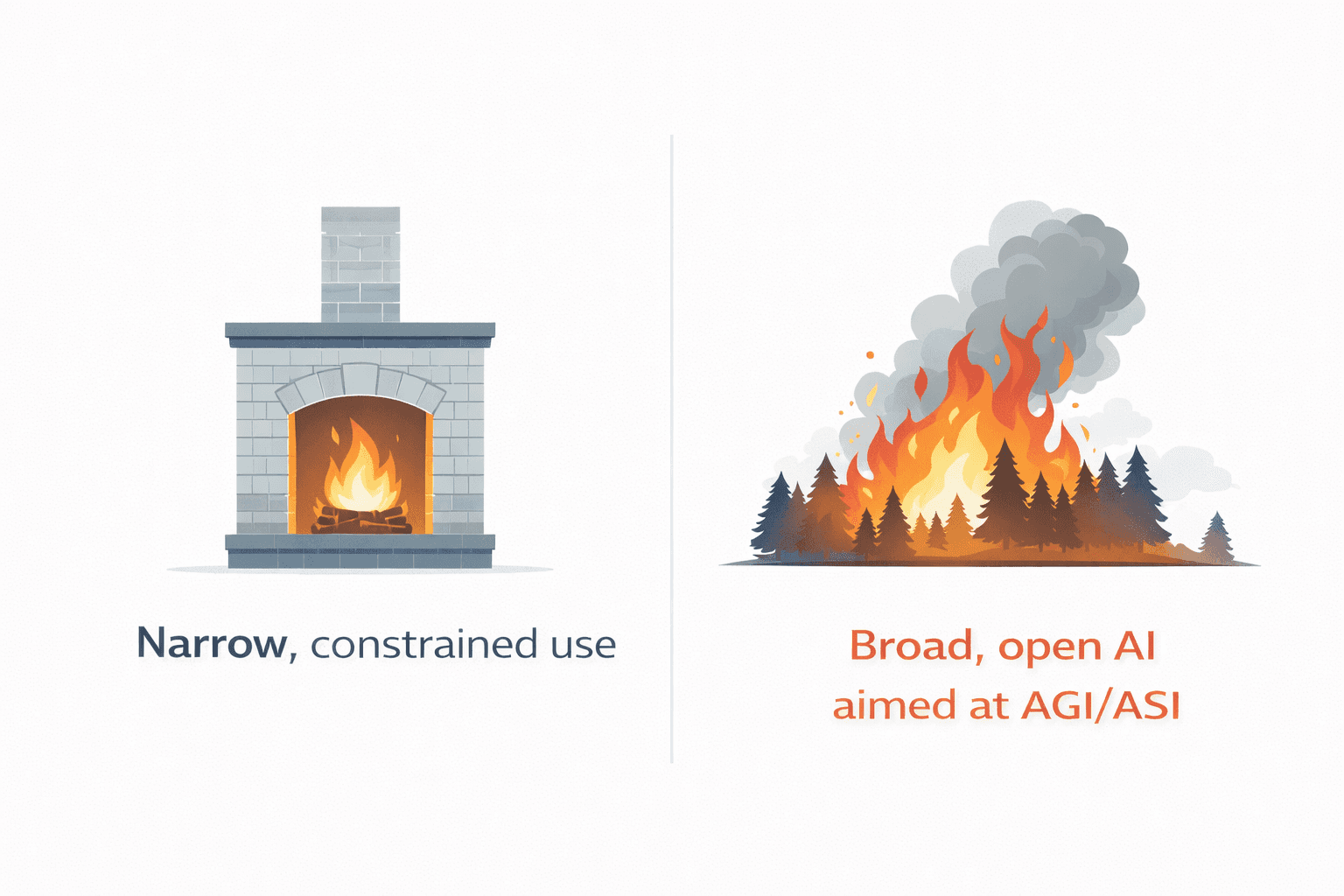

A simple picture: fire in a fireplace

For our God is a consuming fire (Hebrews 12:29).

We find the "fire" analogy very helpful.

Fire can be good

- Fire can warm a home, cook food, and keep people alive in winter.

Fire can be destructive

- Fire can also burn a house down and destroy an entire forest.

The right conclusion is not "fire is inherently evil." The right conclusion is:

- You keep it in a fireplace or stove.

- You build guardrails and chimneys.

- You monitor it.

- You never forget what it can do when it escapes containment.

That is our posture toward AI:

- Broad, open AI aimed at AGI/ASI is like building a global wildfire and hoping no one misuses it.

- Narrow, constrained AI used for Bible study with strict boundaries is closer to a small, carefully tended fire in a masonry fireplace.

Bottom line

We oppose building world-scale wildfires. We are willing, for now, to use very small, contained fires to help people study Scripture—if those "fires" are kept in a fireplace, under constant scrutiny, and never treated as an authority.

How fast is this actually moving?

One of the hardest things to communicate is just how quickly things are accelerating.

For decades, people used Moore's law as a mental model: chip performance roughly doubled every 18–24 months. But in the last decade:

- An analysis from OpenAI found that the compute used in the largest AI training runs was doubling roughly every 3.4 months between 2012 and 2018—far faster than Moore's law.

- Research from Epoch AI suggests that algorithmic improvements alone roughly halve the compute needed to reach a given level of performance about every nine months, which is like doubling the effective power of the same hardware.

- Commentators have noted that model quality, hardware speed, and the number of chips deployed are each growing by factors of about 3–4× per year, which, when combined, can mean dozens of times improvement in effective capability every year in some domains.

In other words, we are not just talking about "twice as good every 18 months." In effect, we are seeing year-over-year improvements that can be on the order of tens of times more powerful, depending on the task, as better algorithms, bigger models, faster hardware, and larger deployments compound together.
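To make the comparison concrete, the short Python calculation below converts each quoted doubling time into a yearly growth factor, using the standard relation factor = 2^(12 / months per doubling). The final step assumes the three 3–4× factors simply multiply, which is a simplification; real gains overlap and vary by task.

```python
# Illustrative arithmetic only: turns the growth rates quoted above into
# "times per year" figures so they can be compared side by side.

def yearly_factor(months_per_doubling: float) -> float:
    """Growth over 12 months for a quantity that doubles every `months_per_doubling` months."""
    return 2 ** (12 / months_per_doubling)

moore_fast = yearly_factor(18)         # about 1.59x per year
moore_slow = yearly_factor(24)         # about 1.41x per year
training_compute = yearly_factor(3.4)  # about 11.5x per year (OpenAI figure, 2012-2018)
algorithms = yearly_factor(9)          # about 2.52x per year (Epoch AI estimate)

# If three factors (model quality, hardware speed, chips deployed) each grow
# 3x to 4x per year and simply multiply, the combined yearly factor is:
combined_low = 3 ** 3   # 27x
combined_high = 4 ** 3  # 64x

for label, value in [
    ("Moore's law (18 months)", moore_fast),
    ("Moore's law (24 months)", moore_slow),
    ("Training compute (3.4 months)", training_compute),
    ("Algorithms (9 months)", algorithms),
    ("Combined, low estimate", combined_low),
    ("Combined, high estimate", combined_high),
]:
    print(f"{label}: {value:.1f}x per year")
```

Even on these rough assumptions, the gap is stark: a Moore's-law pace gives well under 2× per year, while the compounded figures land in the tens.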

We do not need to pick a precise number to see the pattern: this is not a slow, distant-future technology. It is accelerating now, and many of those closest to it are worried about where it could be by 2027–2030.

Why AGI/ASI could be a kind of "last invention"

Some thinkers have suggested that a true AGI that can improve its own algorithms and design new technologies could function as a "last invention"—because once you have it, it can invent almost everything else, faster and better than we can.

We view this as speculative but plausible:

- Current AI already accelerates coding, research, and design work.

- If systems reach the point where they can autonomously plan experiments, design materials, explore vast scientific and engineering spaces, and iterate both hardware and software at machine speeds, human-driven innovation stops being the bottleneck.

From a biblical worldview

- Humanity was told to "fill the earth and subdue it" (Genesis 1:28). Technology has always been part of that mandate.

- At the same time, the tower of Babel (Genesis 11) reminds us that human technological unity, when divorced from submission to YHWH, leads to pride and judgment.

We do not claim certainty on timing or details. But we see enough warning signs to treat AGI/ASI as a serious possibility in the near term—and a potential accelerant to a global system in which:

- No one buys or sells without permission.

- Truth is drowned in curated, synthetic media.

- Organized resistance to evil becomes nearly impossible.

That looks highly consistent with the kind of system described in Revelation 13. It is hard to imagine a more fitting technical candidate than the AI-driven infrastructure currently being pursued.

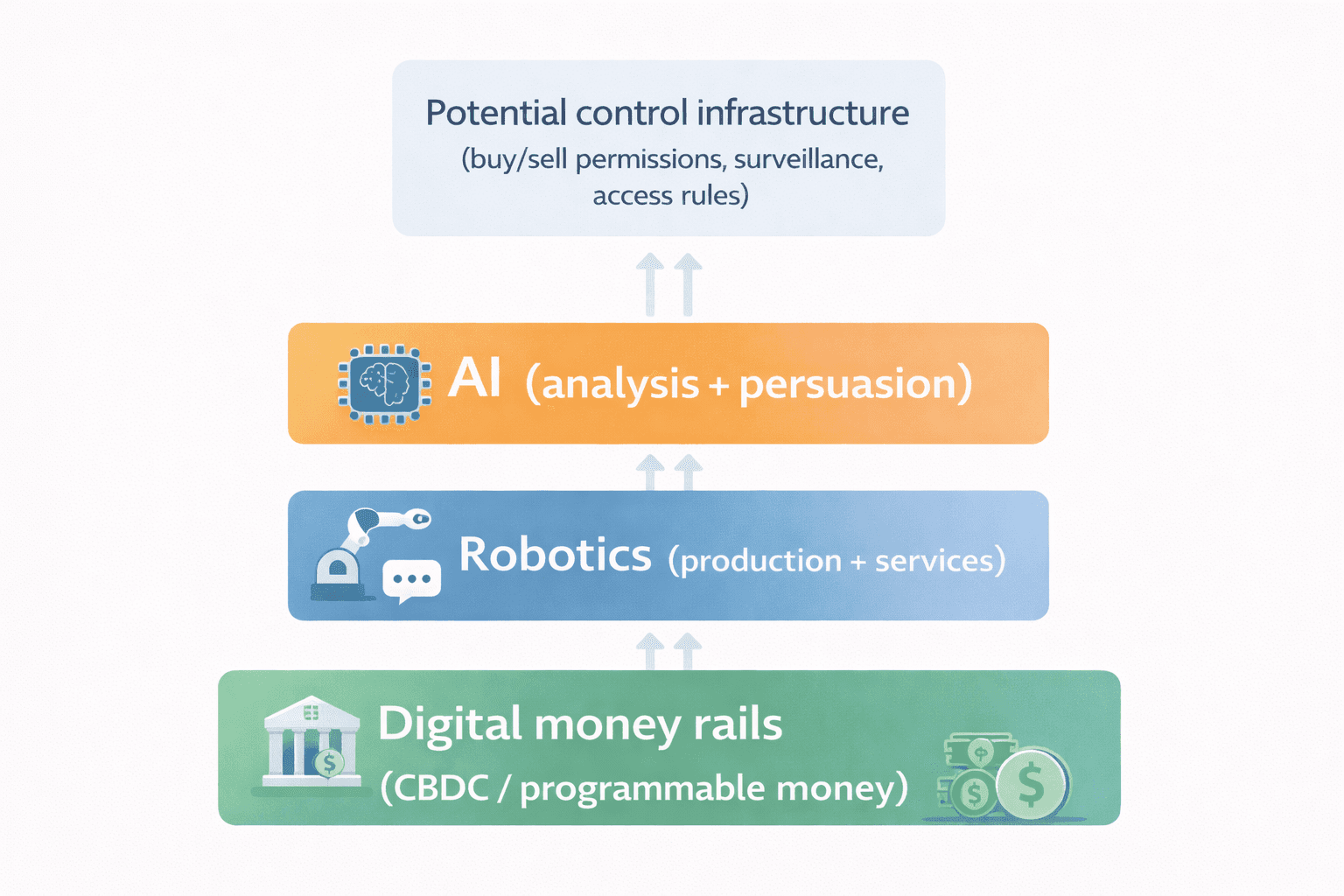

Robotics, abundance, and the concentration of control

One of the key reasons for our concern is the physical side of AI: robots and automation.

Major banks and industry analysts have projected that, over the coming decades, AI-enabled robots could number in the billions across industrial, service, and humanoid forms, potentially outnumbering the human workforce in many sectors. If even a significant portion of this vision becomes reality:

- The marginal cost of labor for many physical tasks falls toward very low levels.

- Production, logistics, agriculture, and services can operate 24/7 with relatively few humans.

- Those who own the AI + robotics infrastructure can provide goods and services at far lower cost than anyone else.

- Large numbers of human workers become economically "replaceable" in many industries.

Some see this as a path to "post-scarcity abundance." Others see a path to:

- Extreme dependence on whoever controls the robots and infrastructure.

- Massive job displacement and social instability.

- Pressure toward systems like "universal basic income" tied to compliance with certain rules.

We are not saying every forecast will come true, or that timelines are fixed. But the direction is clear: physical AI changes the economics of production and labor in ways that naturally centralize power.

Once production is centralized, whoever controls access to goods and services effectively controls survival.

The rich rules over the poor,

and the borrower is the slave of the lender (Proverbs 22:7).

Now imagine that dynamic at global scale, with AI-driven robots and programmable money instead of individual creditors.

CBDCs and programmable money rails

Many believers have heard the term CBDC but are not sure what it means or why it matters.

In simple terms:

- A central bank digital currency is a purely digital form of a nation's currency, issued directly by the country's central bank.

- It is like a digital banknote: instead of paper cash in your hand, you hold a digital balance that is a direct claim on the central bank.

CBDCs are typically discussed in two main forms:

- Retail CBDC: used by ordinary people and businesses for everyday payments.

- Wholesale CBDC: used only between banks and financial institutions for large settlements.

How is a CBDC similar to, and different from, something like Bitcoin?

| Topic | Bitcoin | CBDC |
|---|---|---|
| Who issues it? | Not issued by any state; created by a decentralized network. | Issued and guaranteed by a central bank as official national currency. |
| Who controls it? | Permissionless network; no single actor can unilaterally block a valid transaction. | Centralized system; authorities can set rules for how, when, and by whom it is used. |
| Identity & traceability | Public but pseudonymous; linking addresses to identities requires extra work. | Generally designed to be tied to verified identities, making spending straightforward to trace. |
| Programmability | Protocol rules are mostly fixed; no central authority can arbitrarily change what your coins can be spent on. | Often discussed as programmable money: rules can be enforced at the transaction or account level. |

Programmability and control

CBDCs are often discussed in terms of programmable money: the ability to enforce rules such as:

- "This benefit can only be used for groceries."

- "This account is frozen."

- "You have reached your monthly allowance for fuel or travel."

This does not mean every CBDC project will include all these controls, or that they will all be misused. But technically, CBDCs make highly granular control of buying and selling straightforward.
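To show why such rules are technically simple once every payment flows through one central authorization step, here is a minimal Python sketch. Everything in it (the `Account` and `Payment` types, the categories, and the 200-unit fuel cap) is hypothetical and invented for illustration; it does not describe any actual CBDC design.

```python
from dataclasses import dataclass, field

# Hypothetical rules, invented for illustration only.
MONTHLY_CAPS = {"fuel": 200.0}      # per-category monthly allowance
BENEFIT_CATEGORIES = {"groceries"}  # where benefit money may be spent

@dataclass
class Account:
    balance: float
    frozen: bool = False
    # Amount already spent this month, by category.
    spent_this_month: dict[str, float] = field(default_factory=dict)

@dataclass
class Payment:
    amount: float
    category: str               # merchant category, e.g. "groceries" or "fuel"
    from_benefit: bool = False  # paid with restricted benefit money?

def authorize(account: Account, payment: Payment) -> tuple[bool, str]:
    """Central check run before every payment; returns (approved, reason)."""
    # "This account is frozen."
    if account.frozen:
        return False, "account frozen"
    # "This benefit can only be used for groceries."
    if payment.from_benefit and payment.category not in BENEFIT_CATEGORIES:
        return False, "benefit restricted to groceries"
    # "You have reached your monthly allowance for fuel or travel."
    cap = MONTHLY_CAPS.get(payment.category)
    spent = account.spent_this_month.get(payment.category, 0.0)
    if cap is not None and spent + payment.amount > cap:
        return False, f"monthly {payment.category} allowance reached"
    if payment.amount > account.balance:
        return False, "insufficient funds"
    return True, "approved"

account = Account(balance=500.0, spent_this_month={"fuel": 180.0})
print(authorize(account, Payment(30.0, "fuel")))                         # → (False, 'monthly fuel allowance reached')
print(authorize(account, Payment(40.0, "restaurant", from_benefit=True)))  # → (False, 'benefit restricted to groceries')
print(authorize(account, Payment(40.0, "groceries", from_benefit=True)))   # → (True, 'approved')
```

In this sketch, none of the checks needs exotic technology; the control comes from routing every transaction through a single rule engine whose rules one authority can change at will.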

When we combine:

- AI-driven surveillance and analytics,

- AI-directed robotics and production, and

- CBDCs or similar programmable money rails,

we have a natural infrastructure for a system in which "no one can buy or sell unless he has the mark" (Revelation 13:16–17). We are not saying every CBDC is the mark of the beast. We are saying it is very easy to see how such technologies could become the supporting rails of that system.

Quantum, world models, and uncertainty

On top of all this, there are two more technical trends worth mentioning—not because we have prophetic certainty about them, but because they could speed up the patterns above.

World models and embodied AI

Many AI researchers believe that moving beyond static text and images toward "world models" (systems that build internal representations of the physical world and act within it) will be a key step toward more general intelligence. These models are increasingly being integrated with robots ("embodied AI"), enabling machines to learn tasks in simulation and then transfer them to the real world.

The technical details are still emerging, and there is disagreement about how far this approach can go. But the trajectory is clear: AI systems are being trained not just to talk about the world, but to act in it through physical devices.

Quantum computing as an accelerant

Quantum computing is still early, but governments and corporations see it as a possible multiplier for certain tasks: optimization, cryptography, simulation, and more. It is not a replacement for existing AI, but in some domains it may dramatically accelerate computation and analysis.

We want to be careful:

- Quantum computers today are limited and specialized.

- Many claimed speedups apply only to particular mathematical problems.

- There is significant hype and uncertainty.

Even so, there is a clear geopolitical race around "AI + quantum + data" as a combined strategic asset. We do not need to settle every technical detail to see the biblical pattern: more concentrated capability, more leverage, more temptation to build global systems of control.

Biblical concerns: discernment, idolatry, and deception

From a Torah-grounded, Messiah-centered worldview, the deepest issues are not technical; they are theological and moral.

1. Idolatry of human (and machine) power

Scripture consistently warns against trusting in:

- Horses and chariots (Psalm 20:7).

- Egypt's military power (Isaiah 31:1).

- The work of our own hands (Jeremiah 1:16; 17:5).

Today, we see similar temptations:

- To trust data, models, and systems to steer society.

- To believe that technology will solve sin, death, and injustice.

- To view human or machine "intelligence" as a kind of savior.

When we place ultimate hope in human-built systems—whether political, technological, or economic—we drift into idolatry. AI intensifies this by giving those systems unprecedented reach and apparent "wisdom."

2. Deception and lying wonders

Yeshua warned that in the last days, false messiahs and prophets would perform "great signs and wonders" to deceive, if possible, even the elect (Matthew 24:24). Paul wrote that the man of lawlessness would come "with all power and false signs and wonders, and with all wicked deception" (2 Thessalonians 2:9–10).

We do not know exactly how much of this will involve AI. But:

- Synthetic media can already mimic voices, faces, and events.

- Models can generate persuasive, context-aware content at scale.

- The boundary between reality and simulation is being blurred on purpose.

Whatever the exact mechanisms, we expect AI to be deeply involved in the "wicked deception" that Scripture anticipates.

3. The danger of replacing the Holy Spirit with algorithms

A subtle but real danger is treating AI tools as shortcuts for spiritual discernment:

- Letting a model tell us "what a passage means" instead of studying, praying, and testing.

- Treating AI as a kind of oracle, always ready with an answer.

- Using automation to replace relationship, community, and dependence on YHWH.

No software—no matter how impressive—can replace the Spirit of truth (John 16:13), the Scriptures themselves, or the gathered body of Messiah.

This is exactly why we frame the 119 Assistant as a tool to accelerate study, not replace it:

- Every response is saturated with Scripture and points you back into the text. On our site, verses are easy to inspect in context, so you can see whether the citations have been used faithfully.

- The Assistant's reasoning and logic are meant to be exposed and transparent. It is not an oracle; it is a study aid whose arguments you can follow, question, and test.

- If you find issues in the reasoning or the use of Scripture, we want to hear about them. We can then correct the underlying patterns and make the tool better for everyone.

In other words, the 119 Assistant is meant to be an accelerator for Berean-style study—never a replacement for the Spirit, the Scriptures, or the body.

A brief word on the Mark of the Beast and Greater Exodus

We will not fully restate our Mark of the Beast or Greater Exodus teachings here, but a few key points matter for this AI discussion.

The mark: allegiance, worship, and control

We understand the mark as fundamentally about allegiance and worship—who you serve, and whom you obey—not just about a technology or device.

Technology (AI, biometrics, CBDCs, implants, wearables, etc.) could easily become the infrastructure used to:

- Enforce that allegiance,

- Track compliance, and

- Control commerce.

The danger is not that a particular chip or wallet is "magical," but that people compromise in their allegiance to YHWH and Yeshua in order to participate in a controlled economic system.

From that perspective, the current push toward AI-driven surveillance, digital IDs, robotics, and programmable money looks like a highly likely candidate for the kind of infrastructure Revelation 13 describes. We do not say this with prophetic finality, but with sober confidence that believers should take this very seriously and refuse any system that requires disobedience to YHWH as the price of buying and selling.

The Greater Exodus: a second, greater deliverance

In our understanding, Egypt often pictures the nations and their oppressive systems. YHWH brought his people out of the oppression of Egypt in a mighty act of deliverance. Scripture also speaks of a future regathering and deliverance so great that it will overshadow the first Exodus (Jeremiah 16:14–15).

We believe:

- A greater oppression is coming under the Antichrist and the beast system.

- This oppression will be more total and technologically enforced than anything Pharaoh could have imagined.

- Just as YHWH delivered his people out of the oppression of Egypt, he will again protect his people and deliver them out of a global system of oppression as he carries forward his plan.

Whatever form the end-times beast system takes—even if it uses AI, robotics, and programmable money—YHWH will not abandon those who fear him and keep his commandments by faith in Yeshua.

What believers should do: posture and practice

We cannot control the global AI race. But we can choose how we live in the midst of it.

1. Guard your heart and your hope

Some trust in chariots and some in horses,

but we trust in the name of YHWH our God (Psalm 20:7).

- Do not put your hope in technological progress or in human resistance movements.

- Place your hope in YHWH's character, promises, and covenant faithfulness.

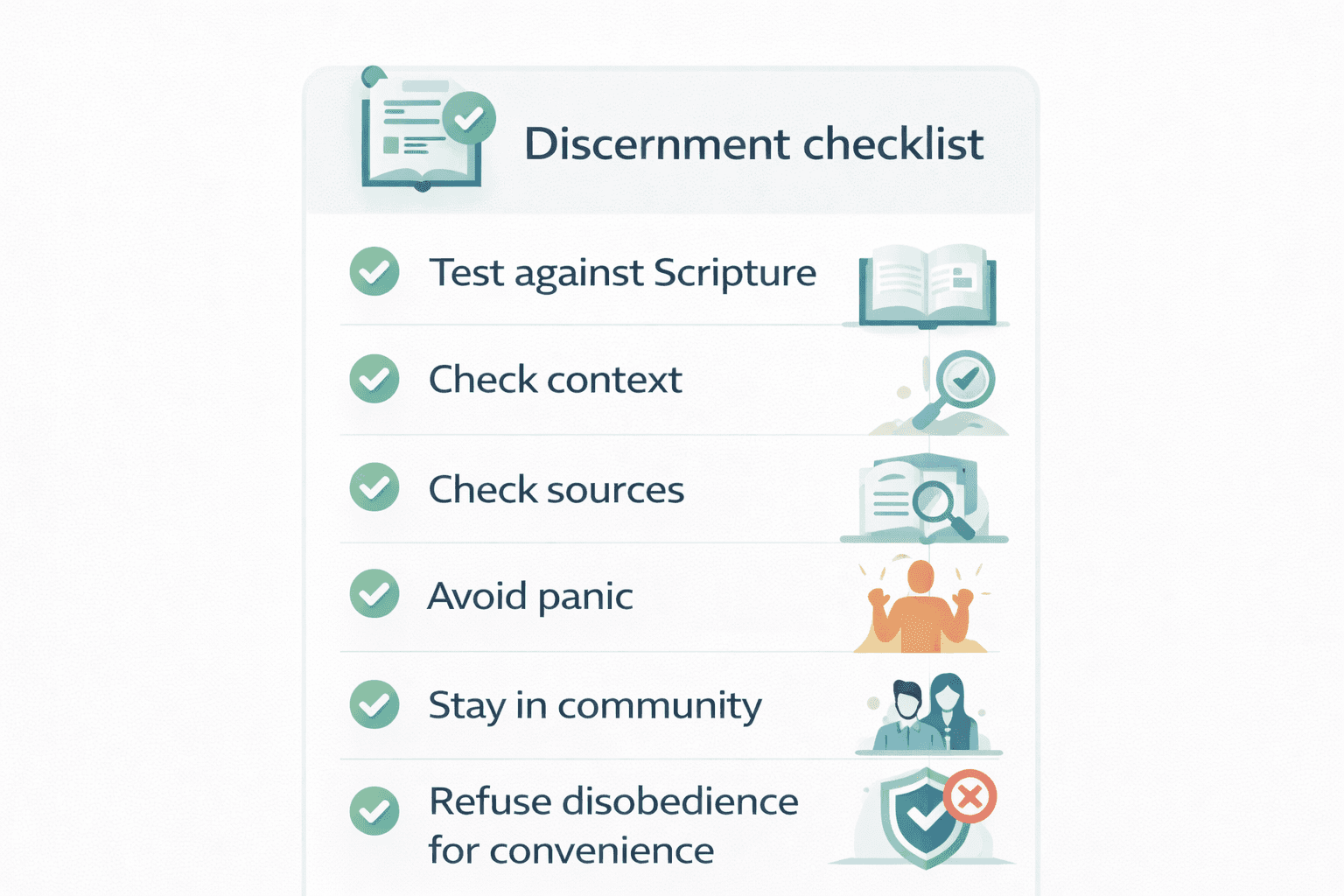

2. Practice discernment and sobriety

Test everything; hold fast what is good (1 Thessalonians 5:21).

- Be slow to believe sensational AI promises and sensational AI fears.

- Check sources. Ask, "Who benefits if I believe this?"

- Be especially cautious about forming doctrine from any online source—human or AI.

3. Stay grounded in Scripture and community

- Read the Scriptures directly, in context.

- Pray, fast, and seek YHWH's face.

- Walk in obedience to his Torah by the power of the Spirit.

- Stay connected to a real, embodied community of believers.

4. Be cautious but not paralyzed

- We do not think believers must avoid every use of software that involves machine learning.

- We should be extra cautious when tools touch truth, identity, or spiritual authority.

- We should prefer open, inspectable, auditable systems over opaque black boxes where possible.

- We should be ready to walk away from any tool if using it would require disobedience to YHWH.

5. Prepare for dependence and control pressures

- Be aware that future systems may pressure you to trade faithfulness for convenience, safety, or economic access.

- Where possible, reduce unnecessary dependence on centralized digital systems.

- Cultivate skills, relationships, and habits that make you less vulnerable to coercive technology.

- Above all, remember that Yeshua wins. Our goal is not to "beat" the beast system by clever tech, but to remain faithful witnesses, even under pressure.

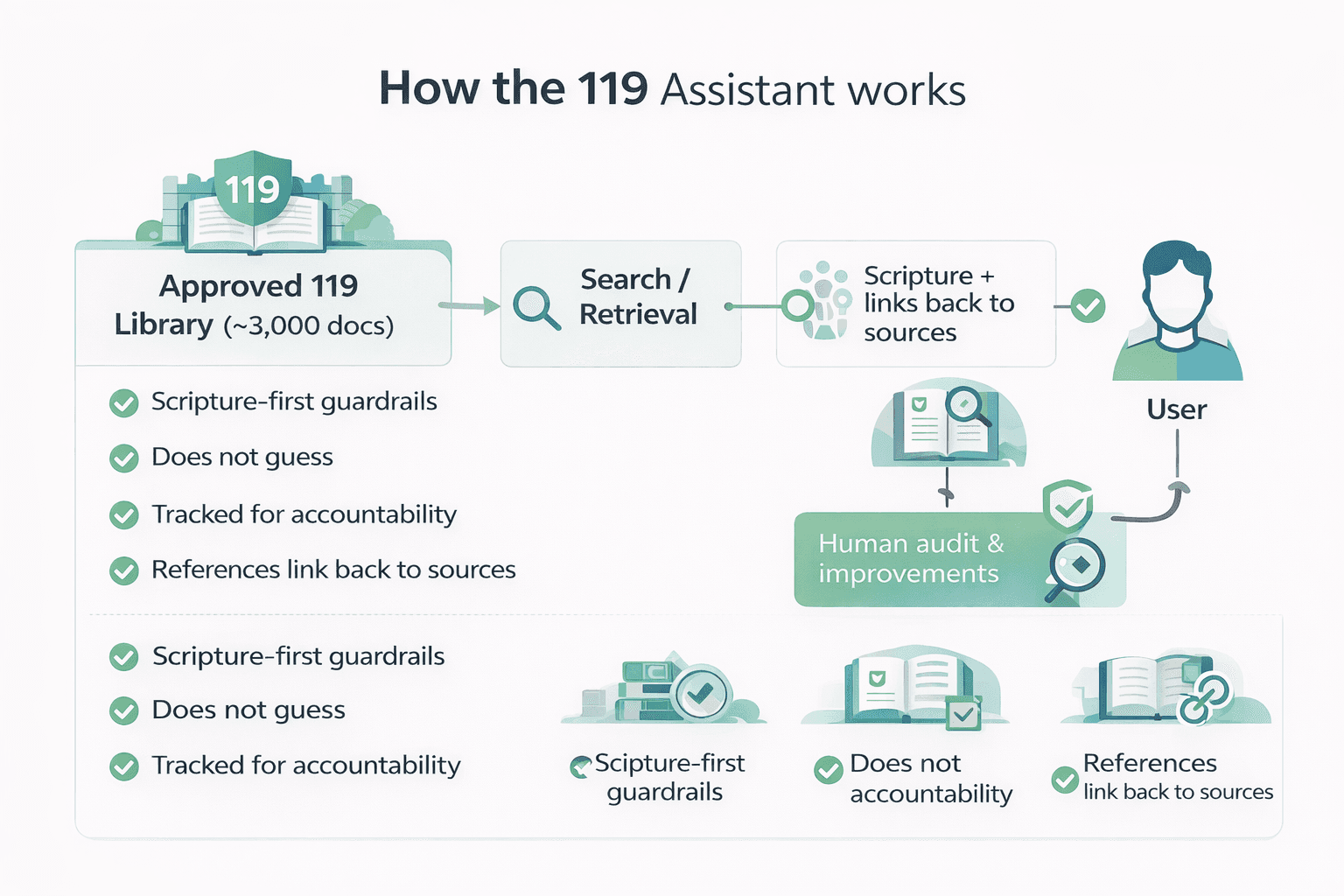

How the 119 Assistant is different from broad AI

Given everything above, why are we using any AI at all?

Because we believe there is a meaningful, defensible distinction between:

- Building or endorsing broad open AI and the push toward AGI/ASI, and

- Using narrow, constrained tools as a study aid within strict, transparent guardrails.

Here is how we have designed the 119 Assistant.

1. Narrow scope and constrained behavior

- It is a Bible study assistant, not a general chatbot and not a "Bible teacher."

- Its job is to help you search, organize, and summarize Scripture and our approved 119 teaching content—and then point you back to the sources so you can verify.

- It is explicitly instructed to avoid speculation, novelty, or "new doctrine."

2. No open internet browsing

- The 119 Assistant does not browse the live web.

- It is bound to a curated knowledge base we control: nearly 3,000 internal documents of our teachings and notes, created as our doctrine repository.

- This equips it to reteach our content in the way we actually teach it, instead of pulling from the chaos of the broader internet.

3. Scripture-first guardrails

- When Scripture and 119 content appear to conflict, Scripture is treated as primary.

- We continually test the system against the Bible and correct it when we find issues.

- The tool is meant to remain subordinate to Scripture, never above it.

4. Transparent about what it does not know

- If 119 has not taught on something, the Assistant is instructed to say so and avoid speculation.

- It is allowed (and encouraged) to say "We do not know" or "119 has not addressed this directly."

- As we discover topics where we have little or no published teaching, we are gradually adding carefully written notes that capture our current conclusions so that the Assistant can answer more consistently—always subject to revision as we continue to study.
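The "closed library, admit the gap" behavior described in these sections can be illustrated with a toy Python sketch. It is an assumption-laden illustration, not how the 119 Assistant is actually built (this page does not describe its internals): the two placeholder documents, the stopword list, the `MIN_OVERLAP` threshold, and the simple word-overlap matching are all hypothetical stand-ins for whatever retrieval a real system uses.

```python
import re

# Hypothetical placeholder library standing in for a curated, closed set of
# documents. Nothing outside this dictionary is ever consulted (no web access).
LIBRARY = {
    "feasts-notes": "The appointed feasts of YHWH in Leviticus 23",
    "sabbath-notes": "The Sabbath commandment in Exodus 20",
}

NOT_ADDRESSED = "119 has not addressed this directly."
STOPWORDS = {"a", "about", "an", "and", "do", "does", "in", "is", "of", "on", "say", "the", "what"}
MIN_OVERLAP = 1  # hypothetical: shared keywords needed to count as a match

def keywords(text: str) -> set[str]:
    """Lowercased words, minus common filler words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS

def retrieve(question: str) -> list[str]:
    """Ids of library documents that share enough keywords with the question, best first."""
    q = keywords(question)
    scored = [(len(q & keywords(body)), doc_id) for doc_id, body in LIBRARY.items()]
    return [doc_id for score, doc_id in sorted(scored, reverse=True) if score >= MIN_OVERLAP]

def answer(question: str) -> str:
    sources = retrieve(question)
    if not sources:
        # Admit the gap instead of speculating.
        return NOT_ADDRESSED
    # A real assistant would summarize these documents; here we only name them.
    return "Based on: " + ", ".join(sources)

print(answer("What does Exodus 20 say about the Sabbath?"))  # → Based on: sabbath-notes
print(answer("Will AI become conscious?"))                   # → 119 has not addressed this directly.
```

The design point is the empty-result branch: when nothing in the closed library matches, the sketch returns a plain admission rather than generating an answer from elsewhere.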

5. Built and coded in-house, with human auditing and continuous improvement

- We built and integrated this tool ourselves, so we know how it works and where its limits are.

- Right now, every prompt and response is audited anonymously by a human for one purpose: to improve the quality and faithfulness of the 119 Assistant's outputs.

- Only a small fraction of responses need adjustment, but when we find problems we:

  - Patch the underlying behavior or data so it does better next time.

  - Use those discoveries to expose gaps where we need more teaching or clarification.

- This is an ongoing process of continuous improvement. The goal is that the tool becomes more accurate, more consistent, and more aligned with Scripture and our published teachings over time.

What we are actually seeing: real-world fruit of the 119 Assistant

The concerns above are very real. At the same time, we are already seeing remarkable, practical benefits from this narrow, constrained use of AI.

Truly global, multi-language reach

- The Assistant can respond in virtually any major language on the planet.

- When someone asks a question in their own language about a topic we have already taught on, the Assistant can generate a well-formed, article-style answer that faithfully reflects how we teach it—only now in their language.

- This can happen for millions of people simultaneously.

Saturated in Scripture, pointing people back to the Word

- The Assistant is designed to load its answers with Scripture and to point people back to the passages in context.

- On our site, verses are easily viewable in context, so users can see the Word directly rather than relying on a bare paraphrase.

- Rather than creating shallow "hot takes," it is meant to drive people into their Bibles, not away from them.

People coming to Torah in real time

- We are already seeing people, in different languages, being led into Torah-observant faith in Yeshua through conversations with the 119 Assistant.

- We are seeing people strengthened in their faith, having long-standing questions answered, and being pointed into deeper study of the Word.

- What once would have required a slow, one-on-one process is now happening at a scale and speed that is hard to describe.

Custom, one-on-one teaching at scale

- Each user can receive a custom, article-style response tailored to their specific question, often within a couple of minutes.

- They can then ask follow-up questions, refine, and go deeper.

- This is not replacing discipleship or fellowship, but it is providing an unprecedented study accelerator that is available at any time, from anywhere.

Driving our own growth and clarity

Because we audit every response, the Assistant is constantly exposing areas where:

- Our existing materials could be clearer.

- We have gaps or have not yet taught on a subject.

We are using these insights to improve our own content, add notes where needed, and deepen our explanations.

We want to be careful not to over-state things, but from a ministry perspective, this may be one of the most powerful Bible study tools we have ever seen—when it stays within its narrow, Scripture-first boundaries.

How believers might use broad AI tools (and where we draw the line)

Because broad AI is already embedded in many tools people use every day, it may be helpful to draw a practical line.

We believe it can be reasonable, with discernment, to use broad AI tools for things like:

- Curating secular or technical research for later consideration and review.

- Summarizing long non-theological documents you already trust.

- Helping with neutral tasks like formatting, data extraction, or coding.

Where we draw the line

However, we strongly discourage using broad AI tools for:

- Establishing biblical doctrine.

- Asking for "options" on how to interpret Scripture and then treating those options as balanced.

- Debating or arguing doctrine, especially in areas where mainstream scholarship has already rejected the ongoing validity of YHWH's Torah.

Broad AI systems are trained mostly on dominant, mainstream sources. That means:

- They generally do not reason from a Torah-observant perspective.

- They naturally echo the same antinomian mistakes made by much of modern scholarship.

- Even if you try to "pin" a broad model into a long discussion, it tends to circle back to the same underlying assumptions rather than truly turning away from them.

For that reason, we do not use those kinds of models to build our own doctrine, and we do not recommend that believers lean on them for theological formation, doctrinal arguments, or spiritual guidance.

Why we can oppose AGI/ASI and still use narrow AI tools

Some may see a contradiction:

"If you think AGI/ASI is dangerous and beast-system adjacent, why use AI at all?"

We see it this way:

- We oppose the destination: a world where a handful of actors wield godlike machine power over everyone else.

- We acknowledge the reality: narrow AI is already embedded in many aspects of life (phones, search, spam filters, etc.).

- We choose to use limited, auditable, Scripture-subordinate tools—for now—in a way we believe can serve the body and help people test everything.

We can, in good conscience:

- Warn loudly about the dangers of where this is going.

- Advocate against the rush toward AGI/ASI and concentrated digital control.

- And still run a tightly controlled, non-browsing, Scripture-first Bible study assistant for as long as that remains possible and beneficial.

In summary

- We believe AI, robotics, and digital money are converging into an infrastructure that looks highly likely to be involved in the end-times beast system Scripture warns us about, and that it may be coming soon.

- We oppose the pursuit of AGI/ASI that would centralize "godlike" power into the hands of a small group.

- We believe the pace of progress makes AGI in the late 2020s a serious possibility, not a distant abstraction.

- We see AI-driven deception and programmable money as fitting neatly into biblical warnings about end-times deception and commerce control.

- We call believers to discernment, sobriety, and deeper dependence on YHWH—not on algorithms.

- We use the 119 Assistant as a narrow, constrained, in-house, Scripture-first Bible study tool, and we insist that it remain a starting point, never an authority.

- We are already seeing real fruit: people coming to Torah, being strengthened, and being driven back into the Scriptures in their own languages, at a scale we have never seen before.

- Our heart is to help the body of Messiah love YHWH, walk in his ways, and be ready for whatever comes—without fear, without naivety, and without bowing to the idols of our age.

Selected sources (for those who want to dig deeper)

These are not endorsements of every conclusion in these works, but examples of sources discussing the timelines and dynamics described above.

- "AI 2027 Timeline Survey." AI 2027, 2024.

- Aschenbrenner, Leopold. "Situational Awareness: The Decade Ahead." LessWrong, 2024.

- Heath, Alex. "DeepMind Co-Founder on AGI by 2030." Axios, 2023.

- Amodei, Dario, et al. "AI and Compute." OpenAI, 2018.

- Sevilla, Jaime, et al. "Trends in Machine Learning Algorithmic Progress." Epoch AI, 2022.

- Person, Jeremy. "AI Progress: Why Things Feel Like They're Moving So Fast." 2023.

- "Risks from Advanced AI." 80,000 Hours, multiple articles and interviews, 2017–2024.

Have questions?

If you are new to the 119 Assistant, start with How It Works. If something seems off, test it against Scripture, then contact support from the site menu with your prompt and the excerpt that concerns you.